About Me

Hi! I'm Aryaman Patel, a robotics engineer pursuing an MS in Robotics at Northeastern University. I currently work as a Research Assistant at NEURAL (Northeastern University Robust Autonomy Lab), headed by Prof. David Rosen, where I focus on improving visual SLAM systems for autonomous robots.

I have worked extensively on constructing factor graphs for bundle adjustment problems, with the goal of computationally efficient mapping of large environments for a multi-camera visual-inertial navigation system. I also developed and implemented a Bag-of-Words visual place recognition module for long-term data association and loop closure detection in a multi-sensor navigation system.
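As an illustration of how a Bag-of-Words place recognizer can score loop-closure candidates, here is a minimal Python sketch. It assumes feature descriptors have already been quantized into visual-word IDs by a trained vocabulary, and it uses tf-idf weighting with an L1 similarity score, a common choice in BoW-based loop detection. The function names and the `min_score` / `skip_recent` parameters are hypothetical and not taken from the module described above.

```python
import math
from collections import Counter


def compute_idf(database):
    """idf(w) = log(N / n_w), where n_w is the number of database images containing word w."""
    n_images = len(database)
    doc_freq = Counter(w for words in database for w in set(words))
    return {w: math.log(n_images / df) for w, df in doc_freq.items()}


def tfidf_vector(word_ids, idf):
    """L1-normalised tf-idf bag-of-words vector, stored sparsely as {word_id: weight}."""
    if not word_ids:
        return {}
    counts = Counter(word_ids)
    n = len(word_ids)
    # Words never seen in the database get weight 0 (hypothetical simplification).
    vec = {w: (c / n) * idf.get(w, 0.0) for w, c in counts.items()}
    norm = sum(abs(v) for v in vec.values())
    return {w: v / norm for w, v in vec.items()} if norm > 0 else {}


def l1_score(a, b):
    """Similarity in [0, 1] for L1-normalised vectors: 1 - 0.5 * ||a - b||_1."""
    if not a or not b:
        return 0.0
    keys = set(a) | set(b)
    return 1.0 - 0.5 * sum(abs(a.get(k, 0.0) - b.get(k, 0.0)) for k in keys)


def detect_loop(query_words, database, min_score=0.3, skip_recent=0):
    """Return (index, score) of the best-scoring database image, or (None, min_score)
    if nothing beats min_score. The last `skip_recent` images are ignored so that
    temporally adjacent frames are not reported as loop closures."""
    idf = compute_idf(database)
    query = tfidf_vector(query_words, idf)
    best_idx, best_score = None, min_score
    for i, words in enumerate(database[:max(0, len(database) - skip_recent)]):
        score = l1_score(query, tfidf_vector(words, idf))
        if score > best_score:
            best_idx, best_score = i, score
    return best_idx, best_score
```

For example, with `db = [[1, 2, 3, 3], [4, 5, 6], [7, 8, 9, 9]]`, `detect_loop([4, 5, 6, 6], db)` returns index 1 with a score of about 0.83. A practical system would typically also verify candidates geometrically before accepting a loop closure.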

Outside of my core research, I enjoy working on computer vision projects in image restoration, intrinsic image decomposition, and 3D scene understanding, including shadow decomposition, stereo depth mapping, and more. I am passionate about pushing the boundaries of visual perception to enable reliable autonomy in the real world. You can view some of my work on GitHub or learn more about my current robotics research at NEURAL. Please feel free to reach out to me!

I will complete my MS in Robotics in 2024 and continue pursuing my dream of developing autonomous systems that can operate safely and effectively in complex, unlabeled environments.

Projects

Multi-Camera Visual Inertial Navigation Github

This project is a collaboration with the Toyota Research Institute. The system maps the environment and uses the resulting maps to achieve precise heading estimation for downstream control tasks. Our team fused data from multiple cameras, an IMU, and GPS to build a sparse map of the environment. Precise localization depends on an accurate visual place recognition system with loop closure, which reduces drift during mapping. To improve computational efficiency over large environments, I implemented a reduced camera system, which eliminates the landmark variables via the Schur complement so that each linear system is solved over only a subset of the variables, the camera poses.
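The Schur-complement elimination behind a reduced camera system can be sketched on a toy problem. This minimal Python example assumes the normal equations have the block form [[A, B], [B^T, C]] [x_c; x_l] = [g_c; g_l], with camera variables first and landmark variables second. Because C is block-diagonal, C^-1 is cheap, so one solves the smaller system (A - B C^-1 B^T) x_c = g_c - B C^-1 g_l and back-substitutes x_l = C^-1 (g_l - B^T x_c). To keep it short, each landmark is treated as a single scalar so C is diagonal (in real bundle adjustment each landmark contributes a 3x3 block); the helper names are hypothetical.

```python
def solve_dense(M, v):
    """Solve M x = v by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [list(row) + [rhs] for row, rhs in zip(M, v)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


def schur_solve(A, B, c_diag, g_cam, g_lm):
    """Solve [[A, B], [B^T, C]] [x_cam; x_lm] = [g_cam; g_lm] with C = diag(c_diag).

    Landmarks are eliminated first (reduced camera system), then recovered
    by back-substitution."""
    n_cam, n_lm = len(A), len(c_diag)
    c_inv = [1.0 / c for c in c_diag]  # inverting a (block-)diagonal C is cheap
    # Schur complement S = A - B C^-1 B^T
    S = [[A[i][j] - sum(B[i][k] * c_inv[k] * B[j][k] for k in range(n_lm))
          for j in range(n_cam)] for i in range(n_cam)]
    # Reduced right-hand side g_cam - B C^-1 g_lm
    rhs = [g_cam[i] - sum(B[i][k] * c_inv[k] * g_lm[k] for k in range(n_lm))
           for i in range(n_cam)]
    x_cam = solve_dense(S, rhs)  # only camera-sized system is factorised
    # Back-substitute: x_lm = C^-1 (g_lm - B^T x_cam)
    x_lm = [c_inv[k] * (g_lm[k] - sum(B[i][k] * x_cam[i] for i in range(n_cam)))
            for k in range(n_lm)]
    return x_cam, x_lm
```

The payoff is that the dense factorization runs over the camera block only; in large maps the number of landmarks usually far exceeds the number of camera poses.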

Panoptic Segmentation Github

Our project uses the DETR model for panoptic segmentation. We adapt DETR's architecture to accurately distinguish 'stuff' and 'things' classes and fine-tune it on the Cityscapes dataset. We focus on real-time applications and robust evaluation with metrics such as panoptic quality (PQ). By optimizing for challenging scenarios, our work aims to advance scene understanding for autonomous driving and to extend the model toward video panoptic segmentation.

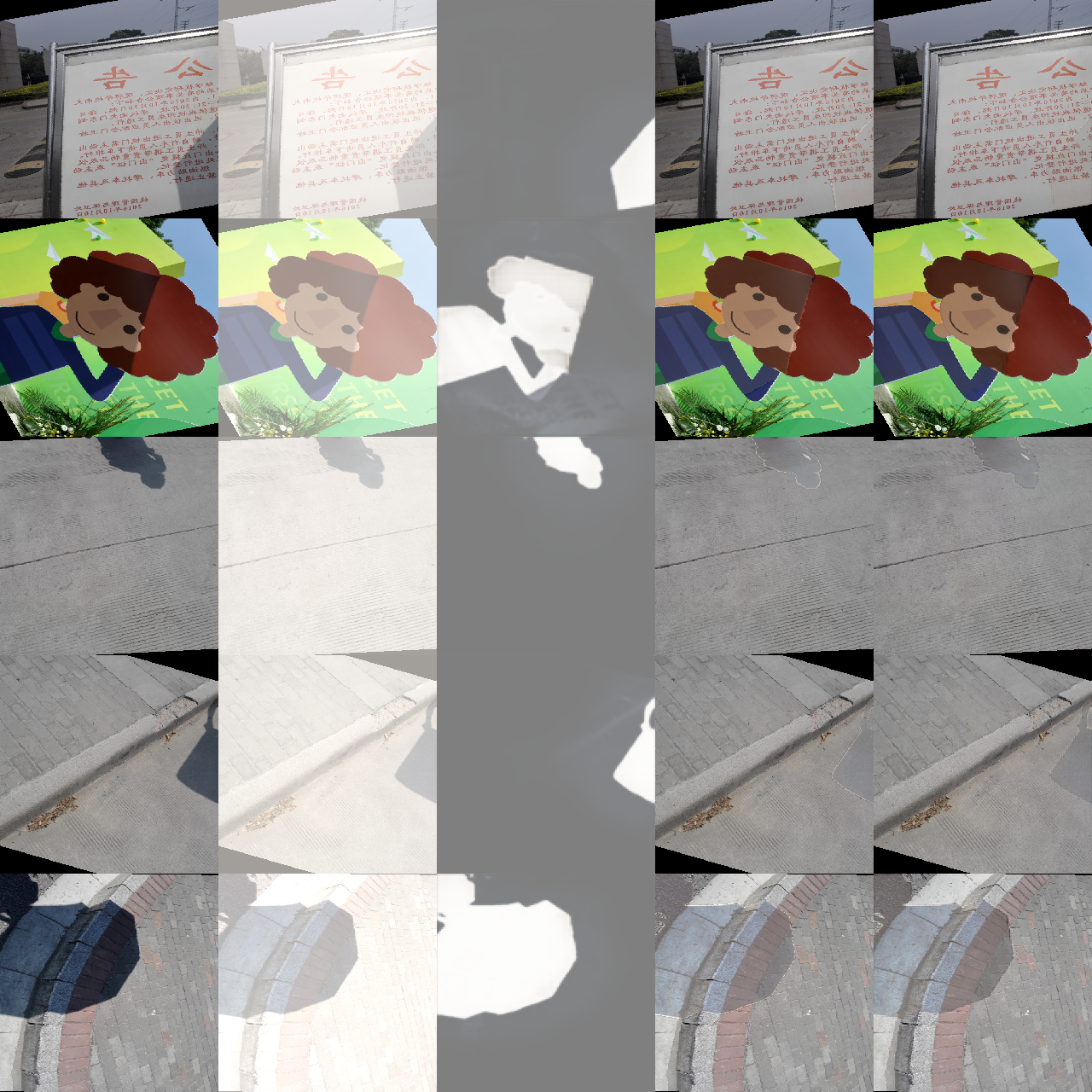
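Panoptic quality combines segmentation and recognition quality: a predicted and a ground-truth segment of the same class are matched when their IoU exceeds 0.5, and PQ = (sum of matched IoUs) / (|TP| + 0.5 |FP| + 0.5 |FN|), averaged over classes. The sketch below is a simplified Python version that represents segments as sets of pixel indices and omits details of the official evaluation such as void-pixel and crowd-region handling; it is not the evaluation code used in this project.

```python
def iou(a, b):
    """Intersection over union of two pixel-index sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def panoptic_quality(pred, gt):
    """pred, gt: lists of (class_id, frozenset_of_pixel_ids). Returns PQ averaged over classes."""
    classes = {c for c, _ in pred} | {c for c, _ in gt}
    pq_per_class = []
    for cls in classes:
        p = [s for c, s in pred if c == cls]
        g = [s for c, s in gt if c == cls]
        matched_p, matched_g, iou_sum = set(), set(), 0.0
        for gi, gs in enumerate(g):
            for pi, ps in enumerate(p):
                if pi in matched_p:
                    continue
                v = iou(gs, ps)
                if v > 0.5:  # for non-overlapping segments, IoU > 0.5 implies a unique match
                    matched_p.add(pi)
                    matched_g.add(gi)
                    iou_sum += v
                    break
        tp = len(matched_g)
        fp = len(p) - len(matched_p)
        fn = len(g) - tp
        denom = tp + 0.5 * fp + 0.5 * fn
        if denom > 0:
            pq_per_class.append(iou_sum / denom)
    return sum(pq_per_class) / len(pq_per_class) if pq_per_class else 0.0
```

A prediction that covers 8 of a ground-truth segment's 10 pixels contributes an IoU of 0.8, while an unmatched prediction of another class only adds to that class's false positives.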

Shadow Image Decomposition Github

This project uses a deep learning method for shadow removal. Inspired by physical models of shadow formation, the method uses a linear illumination transformation to model shadow effects, which allows the shadow image to be expressed as a combination of the shadow-free image, the shadow parameters, and a matte layer. It uses two deep networks, SP-Net and M-Net, to predict the shadow parameters and the shadow matte, respectively.

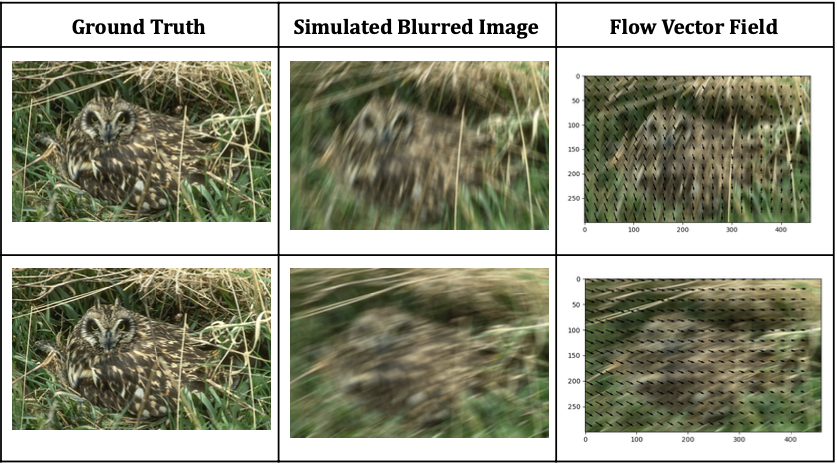
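The linear illumination model can be made concrete per pixel. This sketch assumes the formulation in which a shadowed pixel is relit with per-channel parameters w and b (I_relit = w * I_shadow + b, the quantities SP-Net would predict) and then blended with the input through the matte alpha (what M-Net would predict): I_shadow_free = alpha * I_relit + (1 - alpha) * I_shadow. Images are assumed to be nested lists of RGB tuples with values in [0, 1], and the function names are hypothetical.

```python
def relight(pixel, w, b):
    """Per-channel linear illumination transform: w * I_shadow + b."""
    return tuple(wc * pc + bc for pc, wc, bc in zip(pixel, w, b))


def remove_shadow(shadow_img, matte, w, b):
    """Blend relit and original pixels with the matte:
    I_free = alpha * I_relit + (1 - alpha) * I_shadow, clamped to [0, 1].

    shadow_img: rows of RGB tuples in [0, 1]
    matte: rows of alpha in [0, 1] (1 = fully in shadow, 0 = unshadowed)
    """
    out = []
    for img_row, alpha_row in zip(shadow_img, matte):
        out_row = []
        for pixel, alpha in zip(img_row, alpha_row):
            lit = relight(pixel, w, b)
            blended = (alpha * lit_c + (1.0 - alpha) * orig_c for lit_c, orig_c in zip(lit, pixel))
            out_row.append(tuple(min(1.0, max(0.0, v)) for v in blended))
        out.append(out_row)
    return out
```

With w = (3, 3, 3) and b = (0.1, 0.1, 0.1), a fully shadowed pixel of 0.2 is relit to 0.7, while an unshadowed pixel (alpha = 0) is left unchanged.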

Removing Motion Blur Github

Deblurring images is challenging because the problem is ill-posed. Traditional methods rely on informative hand-designed priors, whereas the method implemented in this project learns them from data. A fully convolutional neural network estimates a motion flow map from the blurred image and is trained on synthetic data with simulated motion flows. The estimated flow is then used to compute blur kernels for non-blind deconvolution, which recovers the sharp image.
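In a motion-flow formulation, each pixel's flow vector (u, v) describes a local linear blur, and the corresponding blur kernel is a normalized line segment. The Python sketch below rasterizes such a kernel under the simplifying assumptions of uniform linear motion during the exposure and a kernel centred on the pixel; `motion_kernel` and its `samples_per_pixel` parameter are hypothetical, and this is not the project's kernel-construction code.

```python
import math


def motion_kernel(u, v, samples_per_pixel=4):
    """Return a square kernel (list of rows) whose weights sum to 1, approximating
    uniform linear motion with displacement (u, v) centred on the pixel."""
    length = math.hypot(u, v)
    half = int(math.ceil(length / 2))
    size = 2 * half + 1
    k = [[0.0] * size for _ in range(size)]
    # Sample points evenly along the segment from -(u, v)/2 to +(u, v)/2.
    n = max(1, int(math.ceil(length * samples_per_pixel)) + 1)
    for i in range(n):
        t = i / (n - 1) - 0.5 if n > 1 else 0.0
        x = int(round(t * u)) + half  # column index
        y = int(round(t * v)) + half  # row index
        k[y][x] += 1.0
    total = sum(map(sum, k))
    return [[w / total for w in row] for row in k]
```

A zero flow vector yields the identity kernel `[[1.0]]`, and a purely horizontal flow puts all of the weight in the middle row.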

Image Mosaicing and Warping Github

This project stitches multiple images together into a panorama. The pipeline consists of feature detection, feature matching, and image warping: features are detected with the Harris corner detector, matched with a brute-force matcher, and the images are warped using inverse distance weighted interpolation.
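A brute-force matcher compares every descriptor in one image with every descriptor in the other. The minimal Python sketch below assumes descriptors are equal-length numeric tuples (for example, flattened patches around Harris corners) and uses the sum of squared differences as the distance. The ratio test against the second-best match is an added assumption commonly used to reject ambiguous matches, not necessarily part of this project's matcher; the function names are hypothetical.

```python
def ssd(a, b):
    """Sum of squared differences between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def brute_force_match(desc1, desc2, ratio=0.8):
    """For each descriptor in desc1, find its nearest neighbour in desc2 by SSD.

    Returns (index_in_desc1, index_in_desc2, ssd) tuples. A match is kept only if
    best_distance < ratio * second_best_distance (compared on squared distances)."""
    matches = []
    for i, d1 in enumerate(desc1):
        dists = sorted((ssd(d1, d2), j) for j, d2 in enumerate(desc2))
        if not dists:
            continue
        best_d, best_j = dists[0]
        if len(dists) == 1 or best_d < ratio ** 2 * dists[1][0]:
            matches.append((i, best_j, best_d))
    return matches
```

The cost is O(N * M) distance evaluations, which is acceptable for the few hundred corners typical of a stitching pair.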

Motion Detection Github

In computer vision, it is often helpful to discard unnecessary parts of an image and focus only on essential regions. When analyzing dynamic objects in a static environment, removing the static background and detecting the moving objects is an excellent first step. In this project, several spatial and temporal filtering techniques are implemented in C++ to detect motion in image sequences with static backgrounds. In particular, absolute differencing and a Prewitt temporal mask are implemented and compared with a derivative of Gaussian filter.
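The three temporal operators can be compared on a toy sequence. The project itself is written in C++; this Python sketch only illustrates the idea, assuming grayscale frames stored as nested lists and a simple fixed threshold on the absolute response. The mask constants, the 3-sigma truncation of the derivative of Gaussian, and the function names are hypothetical choices.

```python
import math

PREWITT_T = [-1.0, 0.0, 1.0]  # temporal Prewitt mask: f(t+1) - f(t-1)


def dog_kernel(sigma):
    """Temporal derivative-of-Gaussian mask sampled on [-3*sigma, 3*sigma].
    Sign convention matches PREWITT_T: past frames are weighted negatively."""
    half = max(1, math.ceil(3 * sigma))
    return [x / sigma ** 2 * math.exp(-x * x / (2 * sigma ** 2)) for x in range(-half, half + 1)]


def temporal_response(frames, t, kernel):
    """Correlate every pixel's intensity over time with `kernel`, centred at frame t."""
    half = len(kernel) // 2
    if t - half < 0 or t + half >= len(frames):
        raise ValueError("kernel does not fit around frame t")
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(w * frames[t + k - half][r][c] for k, w in enumerate(kernel))
             for c in range(cols)] for r in range(rows)]


def abs_diff_response(frames, t):
    """Absolute difference between consecutive frames t-1 and t."""
    return [[abs(a - b) for a, b in zip(row_t, row_prev)]
            for row_t, row_prev in zip(frames[t], frames[t - 1])]


def threshold_mask(response, threshold):
    """Binary motion mask: 1 where |response| exceeds the threshold."""
    return [[1 if abs(v) > threshold else 0 for v in row] for row in response]
```

On a static background, all three responses stay near zero; a pixel whose intensity jumps between frames produces a large response, and the derivative of Gaussian additionally smooths over several frames, which makes it less sensitive to single-frame noise.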

Mobile Robotics Project Github

We designed a robot for environment mapping and AprilTag recognition, which included writing custom code for mapping from laser scans with frontier exploration. Our work included custom mapping modules and a novel approach to camera-to-1D-lidar calibration, a challenging problem because of the lidar's low dimensionality. We formulated the calibration as a nonlinear least-squares optimization problem and solved it using linear algebra, significantly reducing calibration errors.
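Frontier exploration drives the robot toward frontiers, the boundaries between known free space and unexplored space. The Python sketch below uses the textbook definition (a free cell with at least one 4-connected unknown neighbour) and groups frontier cells into 8-connected clusters whose centroids can serve as exploration goals. The occupancy values (-1 unknown, 0 free, 100 occupied) follow a ROS-style convention and, like the function names, are assumptions rather than this project's code.

```python
from collections import deque

UNKNOWN, FREE, OCCUPIED = -1, 0, 100  # assumed ROS-style occupancy values


def frontier_cells(grid):
    """Free cells that have at least one 4-connected unknown neighbour."""
    rows, cols = len(grid), len(grid[0])
    cells = set()
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    cells.add((r, c))
                    break
    return cells


def cluster_frontiers(cells, min_size=1):
    """Group frontier cells into 8-connected clusters.
    Returns (size, (row_centroid, col_centroid)) tuples, largest first."""
    remaining = set(cells)
    clusters = []
    while remaining:
        seed = remaining.pop()
        queue, members = deque([seed]), [seed]
        while queue:  # breadth-first flood fill over 8-neighbours
            r, c = queue.popleft()
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    n = (r + dr, c + dc)
                    if n in remaining:
                        remaining.remove(n)
                        queue.append(n)
                        members.append(n)
        if len(members) >= min_size:
            cy = sum(m[0] for m in members) / len(members)
            cx = sum(m[1] for m in members) / len(members)
            clusters.append((len(members), (cy, cx)))
    return sorted(clusters, reverse=True)
```

Raising `min_size` filters out tiny frontiers caused by sensor noise, at the cost of possibly ignoring narrow openings.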

Mobile Robotics Algorithms Github

Implementations of several algorithms from the Mobile Robotics course, including A* path planning, a particle filter, and Iterative Closest Point (ICP).
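As an example of the first of these, here is a minimal A* sketch in Python. It assumes a 4-connected occupancy grid where 1 marks an obstacle, unit step costs, and the Manhattan-distance heuristic, which is admissible and consistent on such a grid, so the returned path is optimal. The function name and grid convention are hypothetical and not taken from the course code.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected grid (1 = obstacle). Returns a list of (row, col) cells or None."""
    rows, cols = len(grid), len(grid[0])

    def h(p):  # Manhattan distance to the goal
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, node)
    came_from = {start: None}
    g = {start: 0}
    while open_heap:
        _, cost, node = heapq.heappop(open_heap)
        if node == goal:
            path = []
            while node is not None:  # walk parent pointers back to the start
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if cost > g[node]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = node
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = node
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None
```

Instead of a decrease-key operation, the sketch pushes duplicate heap entries and skips stale ones when they are popped, a common idiom with Python's `heapq`.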